Situation

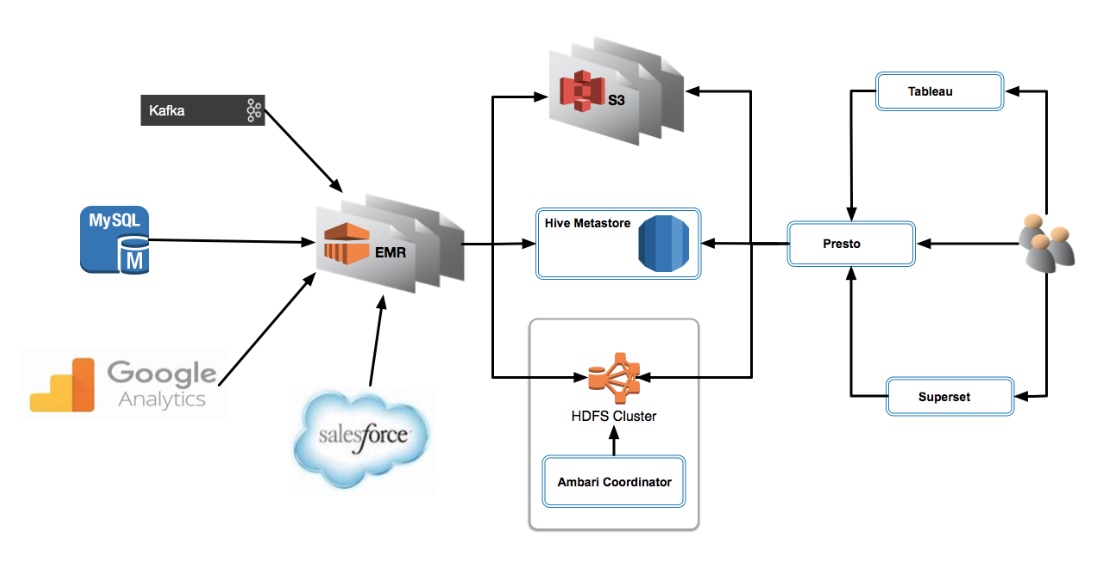

Eventbrite has been using MySQL since its inception as a company in 2006. MySQL has served the company well as an OLTP database and we’ve leveraged the strong features of MySQL such as native replication and fast reads, as well as dealt with some of its pain points such as impactful table ALTERs. The production environment relies heavily on MySQL replication. It includes a single primary instance for writes and a number of secondary replicas to distribute the reads.

Fast forward to 2019. Eventbrite is still being powered by MySQL but the version in production (MySQL 5.1) is woefully old and unsupported. Our MySQL production environment still leans heavily on native MySQL replication. We have a single primary for writes, numerous secondary replicas for reads, and an active-passive setup for failover in case the primary has issues. Failing over is complicated and risky, which has resulted in extended outages: we’ve fixed the root cause on the existing primary rather than failing over to a new one.

If the primary database is not available then our creators are not creating events and our consumers are not buying tickets for these events. The failover from active to passive primary is available as a last resort but requires us to rebuild a number of downstream replicas. Early in 2019, we had several issues with the primary MySQL 5.1 database and, because we were reluctant to fail over, we incurred extended outages while we fixed the source of the problems.

The Database Reliability Engineering team in 2019 was tasked first and foremost with upgrading to MySQL 5.7 as well as implementing high availability and a number of other improvements to our production MySQL datastores. The goal was to implement an automatic failover strategy on MySQL 5.7 where an outage to our primary production MySQL environment would be measured in seconds rather than minutes or even hours. Below is a series of solutions/improvements that we’ve implemented since mid-year 2019 that have made a huge positive impact on our MySQL production environment.

Solutions

MySQL 5.7 upgrade

Our first major hurdle was to get current with our version of MySQL. In July 2019 we completed the MySQL 5.1 to MySQL 5.7 (v5.7.19-17-log Percona Server to be precise) upgrade across all MySQL instances. Due to the nature of the upgrade and the large gap between 5.1 and 5.7, we incurred downtime to make it happen. The maintenance window lasted ~30 minutes and it went like clockwork. The DBRE team completed ~15 failover practice runs against Stage in the days leading up to the cut-over, which is one of the reasons the cut-over was so smooth. The cut-over required 50+ people from Engineering, Product, QA, and management in a hangout to support it, with another 50+ engineers assuming on-call responsibilities through the weekend. It was not just a DBRE team effort but a full Engineering team effort!

Not only had MySQL 5.1 reached End-of-Life more than 5 years earlier, but our MySQL 5.1 instances on EC2/AWS had limited storage and we were on track to run out of space at the end of July. Our backs were up against the wall and we had to deliver!

As part of the cut-over to MySQL 5.7, we also took the opportunity to bake in a number of improvements. We converted all primary key columns from INT to BIGINT to prevent hitting MAX value. We had a recent production incident that was related to hitting the max value on an INT auto-increment primary key column. When this happens in production, it’s an ugly situation where all new inserts result in a primary key constraint error. If you’ve experienced this pain yourself then you know what I’m talking about. If not then take my word for it. It’s painful!
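To put the INT versus BIGINT limits in perspective, here is a rough headroom calculation. The limits are MySQL's signed maximums for each type; the current ID and the daily insert rate are made-up numbers for illustration, not Eventbrite figures.

```python
# Signed integer limits for MySQL auto-increment columns.
INT_MAX = 2**31 - 1      # 2,147,483,647
BIGINT_MAX = 2**63 - 1   # 9,223,372,036,854,775,807


def days_until_exhausted(current_id: int, inserts_per_day: int, max_value: int) -> float:
    """Estimate how many days remain before an auto-increment column hits its max."""
    if inserts_per_day <= 0:
        raise ValueError("inserts_per_day must be positive")
    remaining = max(max_value - current_id, 0)
    return remaining / inserts_per_day


# Hypothetical table: 2.0 billion IDs already used, 1 million inserts per day.
current_id = 2_000_000_000
rate = 1_000_000

print(f"INT:    {days_until_exhausted(current_id, rate, INT_MAX):,.0f} days left")
print(f"BIGINT: {days_until_exhausted(current_id, rate, BIGINT_MAX):,.0f} days left")
```

Under these assumed numbers an INT column has only months of headroom, while a BIGINT column is effectively inexhaustible at the same insert rate.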

In parallel with the MySQL 5.7 upgrade we also upgraded Django to 1.6 due to a behavioral change in MySQL 5.7 related to how transactions/commits were handled for SELECT statements. This behavior change was resulting in errors with older versions of Python/Django running on MySQL 5.7.

Improved MySQL ALTERs

In December 2019, the Eventbrite DBRE successfully implemented a table ALTER via gh-ost on one of our larger MySQL tables. The duration of the ALTER was 50 hours and it completed with no application impact. So what’s the big deal?

The big deal is that we could now ALTER tables in our production environment with little to no impact on our application, and this included some of our larger tables that were ~500GB in size.

Here is a little background. The ALTER TABLE statement in MySQL is very expensive. The table is write-locked for the duration of the ALTER statement, which leads to a concurrency nightmare. The duration of an ALTER is directly related to the size of the table, so the larger the table, the larger the impact. For OLTP environments where lock waits need to be as minimal as possible for transactions, the native MySQL ALTER command is not a viable option. As a result, online schema-change tools have been developed that emulate the MySQL ALTER TABLE functionality using creative ways to circumvent the locking.

Eventbrite had traditionally used pt-online-schema-change (pt-osc) to ALTER MySQL tables in production. pt-osc uses MySQL triggers to move data from the original to the “duplicate” table, which is a very expensive operation and can cause replication lag. As a matter of fact, it directly resulted in several outages in H1 of 2019 due to replication lag or breakage.

GitHub introduced a new Online Schema Migration tool for MySQL (gh-ost) that uses a binary log stream to capture table changes, and asynchronously applies them onto a “duplicate” table. gh-ost provides control over the migration process and allows for features such as pausing, suspending and throttling the migration. In addition, it offers many operational perks that make it safer and more trustworthy to use. It is:

- Triggerless

- Pausable

- Lightweight

- Controllable

- Testable
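As a rough illustration of how a gh-ost run is typically driven, the sketch below assembles a gh-ost command line in Python. The flags are ones gh-ost documents; the host, database, table, ALTER clause, and flag-file path are hypothetical placeholders, and this is not the invocation Eventbrite used.

```python
import shlex


def build_gh_ost_command(host, database, table, alter, execute=False):
    """Assemble a gh-ost command line as a list of arguments."""
    cmd = [
        "gh-ost",
        f"--host={host}",
        f"--database={database}",
        f"--table={table}",
        f"--alter={alter}",
        "--max-lag-millis=1500",  # throttle when replication lag exceeds 1.5 s
        "--chunk-size=1000",      # rows copied per iteration
        # While this file exists, gh-ost keeps syncing but delays the final table swap.
        "--postpone-cut-over-flag-file=/tmp/ghost.postpone.flag",
        "--verbose",
    ]
    if execute:
        # Without --execute, gh-ost performs a dry run (noop) only.
        cmd.append("--execute")
    return cmd


# Hypothetical values, for illustration only.
dry_run = build_gh_ost_command(
    host="replica.example.internal",
    database="app_db",
    table="orders",
    alter="ADD COLUMN notes VARCHAR(255) NULL",
)
print(shlex.join(dry_run))
```

Defaulting to a dry run and requiring an explicit `execute=True` mirrors gh-ost's own noop-by-default behavior, which is part of what makes migrations testable before they touch production data.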

Orchestrator

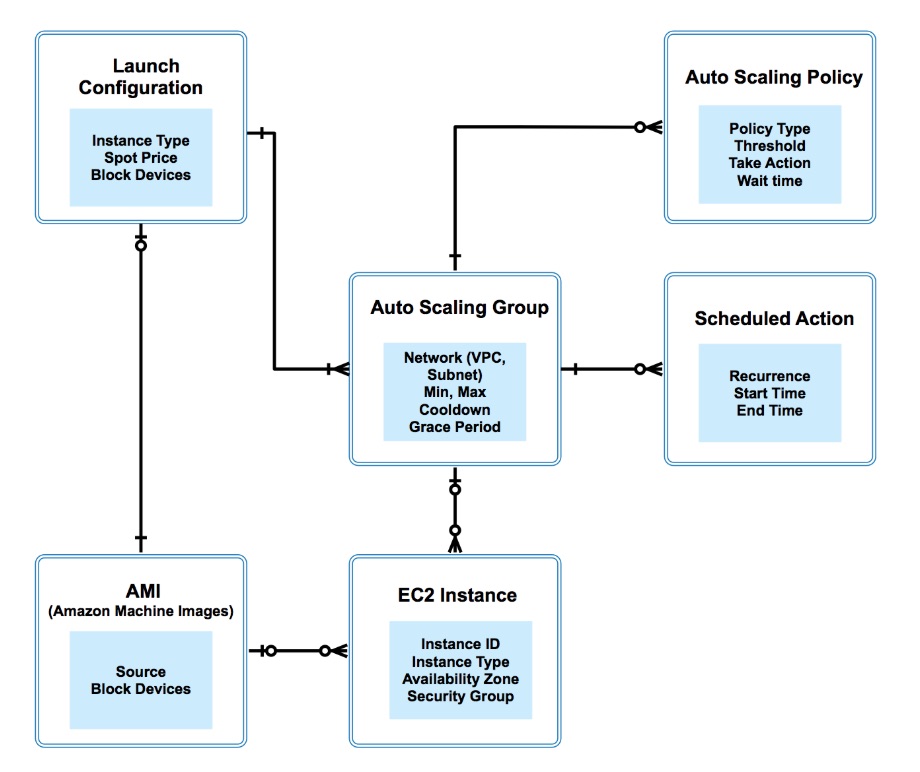

Next on the list was implementing improvements to MySQL high availability and automatic failover using Orchestrator. In February of 2020 we implemented a new HAProxy layer in front of all DB clusters and we released Orchestrator to production!

Orchestrator is a MySQL high availability and replication management tool. It will detect a failure, promote a new primary, and then reassign the name/VIP. Here are some of the nice features of Orchestrator:

- Discovery – Orchestrator actively crawls through your topologies and maps them. It reads basic MySQL info such as replication status and configuration.

- Refactoring – Orchestrator understands replication rules. It knows about binlog file:position and GTID. Moving replicas around is safe: orchestrator will reject an illegal refactoring attempt.

- Recovery – Based on information gained from the topology itself, Orchestrator recognizes a variety of failure scenarios. The recovery process utilizes the Orchestrator’s understanding of the topology and its ability to perform refactoring.

Orchestrator can successfully detect the primary failure and promote a new primary. The goal was to implement Orchestrator with HAProxy first and then eventually move to Orchestrator with ProxySQL.
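To make the promotion step concrete, here is a deliberately simplified sketch of one idea behind it: among the healthy replicas, prefer the one that has applied the most of the failed primary's binary log. This is an illustration only, not Orchestrator's actual algorithm, which weighs many more factors; the `Replica` type, its fields, and the host names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Replica:
    host: str
    binlog_file: str   # primary binlog file the replica has executed up to
    binlog_pos: int    # position within that file
    is_healthy: bool = True


def pick_promotion_candidate(replicas: List[Replica]) -> Optional[Replica]:
    """Return the healthy replica that is furthest along in the primary's binlog."""
    healthy = [r for r in replicas if r.is_healthy]
    if not healthy:
        return None
    # Assumes zero-padded binlog file names (e.g. mysql-bin.000123) so that
    # string comparison orders the files correctly.
    return max(healthy, key=lambda r: (r.binlog_file, r.binlog_pos))


replicas = [
    Replica("replica-a", "mysql-bin.000123", 4_500),
    Replica("replica-b", "mysql-bin.000123", 9_800),
    Replica("replica-c", "mysql-bin.000124", 120, is_healthy=False),
]
candidate = pick_promotion_candidate(replicas)
print(candidate.host if candidate else "no candidate")
```

Picking the most advanced replica minimizes the data the remaining replicas need to catch up on once they are repointed at the new primary.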

Manual failover tests

In March of 2020 the DBRE team completed several manual/controlled failovers using Orchestrator and HAProxy. Eventbrite experienced some AWS hardware issues on the MySQL primary and completing manual failovers was the first big test. Orchestrator passed the tests with flying colors.

Automatic failover

In May of 2020 we enabled automatic failover for our production MySQL data stores. This is a big step forward in addressing the single-point-of-failure situation with our primary MySQL instance. The DBRE team also completed several rounds of testing in QA/Stage for ProxySQL in preparation for the move from HAProxy to ProxySQL.

Show time

In July 2020, Eventbrite experienced a hardware failure on the primary MySQL instance that resulted in automatic failover. The new and improved automatic failover process via Orchestrator kicked in and we failed over to the new MySQL primary in ~20 seconds. The impact to the business was minimal!

ProxySQL

In August of 2020 we made the jump to ProxySQL across our production MySQL environments. ProxySQL is a proxy specially designed for MySQL. It allows the Eventbrite DBRE team to control database traffic and SQL queries that are issued against the databases. Some nice features include:

- Query caching

- Query Re-routing – to separate reads from writes

- Connection pool and automatic retry of queries
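The read/write split can be pictured as an ordered list of pattern rules, where the first match decides which group of servers receives the query. ProxySQL itself is configured through query rules on its admin interface, so the Python below is only a toy model of the idea, not ProxySQL configuration; the hostgroup numbers and sample queries are hypothetical.

```python
import re

WRITER_HOSTGROUP = 10  # hypothetical ID for the primary
READER_HOSTGROUP = 20  # hypothetical ID for the read replicas

# Rules are evaluated in order; the first match wins.
RULES = [
    # Locking reads must see the latest data, so they go to the primary.
    (re.compile(r"^\s*SELECT\b.*\bFOR\s+UPDATE\b", re.IGNORECASE | re.DOTALL), WRITER_HOSTGROUP),
    # All other SELECTs can be served by replicas.
    (re.compile(r"^\s*SELECT\b", re.IGNORECASE), READER_HOSTGROUP),
]


def route(query: str) -> int:
    """Return the hostgroup a query should be sent to."""
    for pattern, hostgroup in RULES:
        if pattern.search(query):
            return hostgroup
    # Anything unmatched (INSERT, UPDATE, DELETE, DDL) defaults to the primary.
    return WRITER_HOSTGROUP


for q in (
    "SELECT name FROM events WHERE id = 42",
    "SELECT id FROM tickets WHERE id = 7 FOR UPDATE",
    "UPDATE tickets SET status = 'sold' WHERE id = 7",
):
    print(route(q), q)
```

Defaulting unmatched statements to the writer is the conservative choice: a misrouted read costs a little primary capacity, while a misrouted write would fail on a read-only replica.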

Also during this period we started evaluating AWS Aurora as the first step in our “Managed Databases” journey. Personally, I prefer to use the term “Database-as-a-Service (DBaaS)”. Amazon Aurora is a high-availability database that consists of compute nodes replicated across multiple availability zones to gain increased read scalability and failover protection. It’s compatible with MySQL, which is a big reason why we picked it.

Schema Migration Tool

In September of 2020, we began testing a new Self-Service Database Migration Tool to provide interactive schema changes (it invokes gh-ost behind the scenes). It supports all “ALTER TABLE…”, “CREATE TABLE…”, or “DROP TABLE…” DDL statements.

It includes a UI where you can see the status of migrations and run them with the click of a button.

Any developer can file and run migrations, and a DBRE is only required to approve the DDL (this is all configurable though). The original source for the tool is the shift project. We’ve not pushed to production yet but we do have a running version in our non-prod environment.

Database-as-a-Service (DBaaS) and next steps

We’re barreling down the “Database-as-a-Service (DBaaS)” path with Amazon Aurora.

DBaaS flips the DBRE role a bit by eliminating mundane operational tasks and allowing the DBRE team to align our work more closely with the business to derive value from the data assets that we manage. We can then focus on lending our DBA skills to application teams and end users—helping deliver new features, functionality, and proactive tuning value to the core business.

Stay tuned for future blog posts on our migration to AWS Aurora! We’re very interested in the scaled read operations and increased availability that an Aurora DB cluster provides. Buckle your seatbelt and get ready for a wild ride 🙂 I’ll provide an update on “Database-as-a-Service (DBaaS)” at Eventbrite in my next blog post!

All comments are welcome! You can message me at ed@eventbrite.com. Special thanks to Steven Fast for co-authoring this blog post.