I joined Eventbrite as their first Technical Fellow, the most senior engineering individual contributor role in the company. One of my initial goals was to define an overarching technical vision for the whole company, one that aligned with our 3-year business strategy and would move us away from a monolithic architecture and a central SRE team toward a distributed system in which ownership shifts to each team. In our most recent post, Vivek Sagi described the list of problems that we identified and our future-looking goals, which to recap are:

- Deliver reliable, high quality, cost effective software solutions to our creators and consumers that allow the business to grow revenue 5x by 2023.

- Enable autonomous dev teams that own their code and architecture. Provide these teams the platform, tooling, and access required to own end-to-end production support for their services.

- Improve dev team accountability to deliver against high level OKRs while giving them autonomy to decide on the path to get there.

- Drive automation and reduce toil. All feature dev teams should be able to apply 60% of their capacity to deliver new business value by 2023. This balance is an estimate based on best performing mature product teams that we have seen in our past experience.

- Establish an operational excellence bar. Deliver 99.99% uptime across all customer facing services.
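To make the uptime goal concrete, it helps to translate 99.99% availability into a downtime budget. The snippet below is a minimal sketch of that arithmetic, assuming a 365-day year and a 30-day month; the function name is ours and is not part of any Eventbrite tooling.

```python
def downtime_budget_minutes(availability_pct: float, period_days: float) -> float:
    """Return the minutes of downtime allowed over a period at a given availability."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_pct / 100)


# Assumed periods: a 365-day year and a 30-day month.
yearly = downtime_budget_minutes(99.99, 365)
monthly = downtime_budget_minutes(99.99, 30)

print(f"{yearly:.1f} minutes per year")      # 52.6 minutes per year
print(f"{monthly:.1f} minutes per 30 days")  # 4.3 minutes per 30 days
```

In other words, a 99.99% target leaves under an hour of total downtime per year across all customer facing services.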

To accomplish these goals, I started working with other engineers and product leaders to understand the history of our technical architecture and the challenges that we were facing, including developer productivity issues, site reliability problems and scalability limitations. From these goals, we derived a set of requirements for our 3-year technical vision:

- Features. As our product offering evolves to deliver high quality self-service experiences for Super Creators and Consumers, we must ensure that our technology stack enables teams to efficiently create, optimize, and maintain the net new functionality we will need to provide. For example, Super Creators require multi-event creating/editing, organization level reporting, and multi-event cart support – all of which will require significant architectural changes relative to our current offering. In addition, a new bundle of marketing tools will enhance creators’ ability to acquire new audiences and grow existing ones, especially by leveraging automation and machine learning to simplify the experience while increasing the impact. We seek to improve our offering for consumers to discover and attend events and to maintain trust in our platform.

- Leveraging Data. We have the opportunity to power new data differentiated products based on data from over a decade of past events and round out our focused product offering with key 3rd party integrations (e.g. Mailchimp, Zoom).

- Performance. User perception of our product’s performance is paramount: a slow product is a poor product. In addition, better page performance leads to better SEO rankings. We decided to leverage Lighthouse’s performance score, an industry standard web dev performance metric, and we endeavor to achieve a green score (90 to 100) across our customer facing features. We also must enforce low latency in our internal infrastructure and API response times, and set reduction goals year-over-year.

- Scale. We will support two types of scaling improvements. First, we will scale our systems to handle 5x the current load as our business grows. Second, we will address the spikiness in our traffic caused by large event sales, where today we use a Waiting Room to throttle calls to our services and DB. We will design systems that automatically scale up and down in response to these events, so that we avoid having to overprovision our infrastructure manually.

- Quality. The defect rate of our product offering can either make or break the experience for our users. In the past year, we have reduced the quantity of critical open bugs from 311 down to 175 and also reduced the number of bugs that missed our fix SLA from 200 to 110. We should aggressively lean into this trend and continue to reduce both by 50% YoY. We will improve our ability to deliver along that trend by increasing our test coverage, reducing our code complexity, having better tooling and increasing our level of automation.

- Self-Service. We will improve self-service both externally and internally. For the former we will aim for a 50% YoY customer support contact rate reduction relative to total ticket sales, while ensuring that help center page views don’t disproportionately grow – the point being that we deliver product experiences that have sufficient in-line guidance to result in successful experiences. Internally, we will ensure that data is accessible to teams and that each data source and service has clear documentation and runbooks as well as contracts and use cases. We will define these in “How We Work” guidelines that every team will follow.

- Development Process. Finally, we must streamline our internal development processes and progress along the DevOps Big 4 to these levels: Deployment frequency: Elite (Daily for web and backend services and up to weekly for native apps), Lead time for changes: Elite (Less than one hour), Mean time to restore service: Elite (Less than one hour), and Change failure rate: Elite (0-15%).

Applying these principles to the problems outlined in the previous post, we came up with the following solutions:

- Our monolith became a bottleneck to our developer velocity and overall site reliability and scalability. We need to decouple our monolith into smaller microservices that can evolve and scale independently. Many other companies have followed a similar path as they grew, and based on our professional experience prior to Eventbrite, we know it works.

- Our initial partial attempt to move to a Services Oriented Architecture (SOA) compounded the problem. In our prior attempt, we lacked a clear vision of what moving to SOA meant and how to accomplish it. We moved business logic out but left the data behind, which made things worse. This time around, we’ve prioritized this architecture transition at a company level, focusing first on the core business logic, including segregating and migrating the underlying data with every service.

- Our performance became suboptimal, leading to poor utilization of our hardware resources. We planned to fix this in two ways: by moving to managed services, which lets cloud providers take on this responsibility, and by choosing technologies that autoscale properly based on our traffic patterns, which are spiky by nature due to large onsale events.

- Our SDLC process was ad hoc and lacked sufficient controls in a few places. We’ve defined and set ownership boundaries between services and logical components. We’ve also enacted Architecture Review Committees to review designs to ensure we are building extensible services that don’t become monolithic themselves.

- Given all the intricate moving parts involved in releasing the monolith, we have relied on our Site Reliability Engineers (SREs) as the only ones who can coordinate all that infrastructure. We are transitioning to DevOps, where each team owns the end-to-end lifecycle of its services. As with an earlier point, we’ve implemented this successfully in the past at other companies and we know it works.

- We lack automation in how we test, deploy, monitor and roll back our code. Our vision document has sections specifically addressing deployments, testing and operations, indicating that we should aspire to full automation and minimize (and remove, if possible) any manual intervention.

- Our core “eb” database is not only monolithic but also mutable, and capturing historical changes has been challenging. We see this as an architectural issue where our data boundaries were never established and we had many different services reading from the same tables and writing to them. We also used the same database technology for all of our use cases which has proven to be inefficient.

- We also built homegrown tools such as our own RPC protocol, PySOA. We are no longer investing our time in areas that are not business critical and where we can’t build competitive differentiation. Wherever we need a commoditized solution, we evaluate buying instead of building whenever possible. This allows us to focus on providing customer value.

As we can see, we’re trying to move ownership from a centralized SRE team and monolithic architecture to empower teams to build and own their systems. But moving from a situation where the technology set for building features is very limited to another one which is much more open has its risks as well, and we didn’t want to end up with a technology spectrum so wide that it would be difficult to maintain. This is why we wrote our Golden Path, a living document that details the technologies that teams are allowed to use in production for their services, and covers areas such as RPC protocols, storage layers or programming languages. We say it’s a living document because teams are still encouraged to evaluate other technologies when designing their systems, and, if proven they’re the right choice, we’ll update our Golden Path to reflect these. We’ll write another post with more details about this Golden Path.
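As an illustration of how a Golden Path could be applied during a design review, the sketch below checks a proposed service stack against an allowlist. This is a minimal sketch, not our actual tooling: the `GOLDEN_PATH` entries are placeholders drawn from technologies mentioned in this post, and the function and field names are hypothetical.

```python
from typing import Dict, Set

# Placeholder allowlist; the real Golden Path is a living document
# and its contents are not reproduced here.
GOLDEN_PATH: Dict[str, Set[str]] = {
    "rpc": {"grpc"},
    "storage": {"aurora-mysql", "dynamodb"},
    "language": {"python", "kotlin", "go"},
}


def off_path_choices(service_stack: Dict[str, str]) -> Dict[str, str]:
    """Return the choices in a proposed stack that are not on the Golden Path."""
    return {
        area: technology
        for area, technology in service_stack.items()
        if technology not in GOLDEN_PATH.get(area, set())
    }


# Hypothetical proposal for a new service.
proposal = {"rpc": "pysoa", "storage": "dynamodb", "language": "go"}
flagged = off_path_choices(proposal)
print(flagged)  # {'rpc': 'pysoa'}
```

A flagged choice is not an automatic rejection. It marks the point where the team either switches to an approved option or makes the case for updating the Golden Path.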

From an architecture perspective we also depicted a high level view of how we’d design our end system, starting from the client-facing applications and APIs:

And then describing the set of components that we would have in our internal network:

Our 3-year technical vision was a collaborative effort where the entire engineering team was involved. We reviewed the proposal multiple times with different stakeholders, including all engineers, data scientists, product managers and other roles in the company. We received hundreds of comments that enriched and improved the whole proposal. We hosted several Q&As to ensure that all aspects of the vision were clear and there were no outstanding items to be resolved. We also presented it to our CEO and the board of directors. We needed the entire company to become owners of this vision, and leaders in achieving it. After our 3-year technical vision was finalized, our engineering organization drove a few subsequent long-term thinking proposals, such as:

- Operational Model. We describe the infrastructure and networking that we’ll have to support our shift from centrally-owned infrastructure to a distributed mindset where each team owns the end-to-end lifecycle of their services.

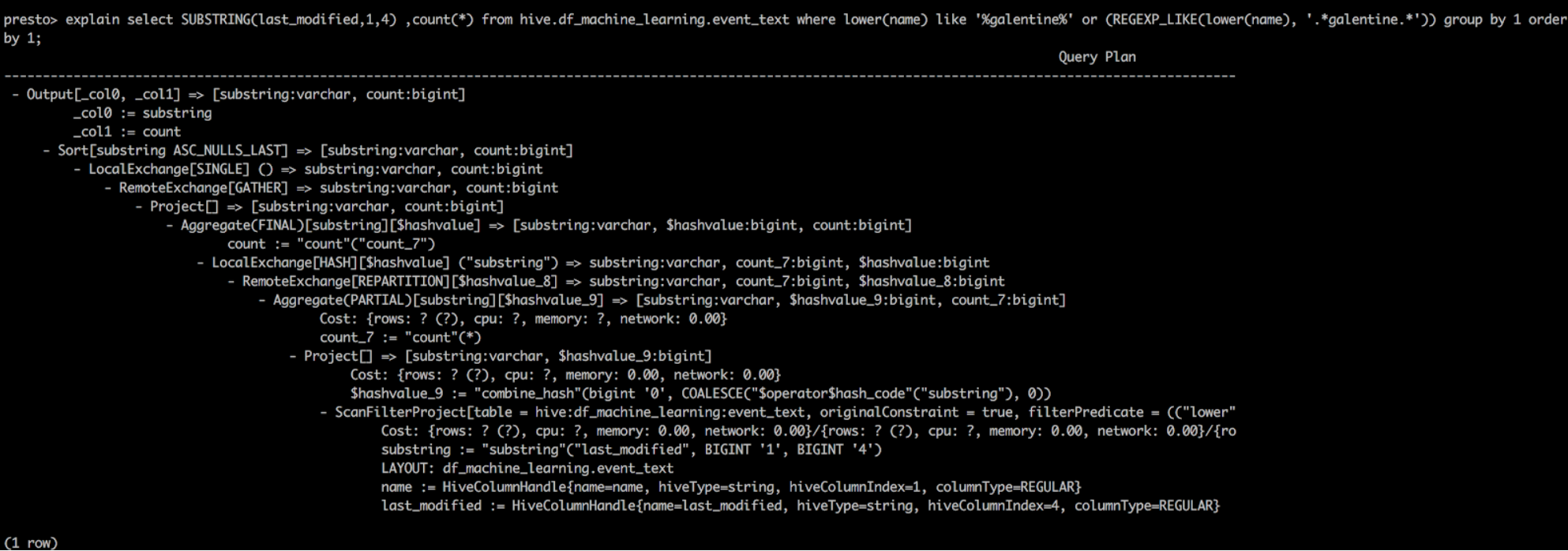

- Data. We describe our future internal and external reporting capabilities, and how these will work with a service oriented architecture where each service has its own storage layer rather than being limited to a centralized MySQL DB. It also covers how to build a centralized data lake that our data scientists can rely on to build their ML models.

- Frontend. We propose how to unify our frontend stack and extract our server side rendering from our monolith to Backend-for-Frontends for each application.

- Mobile. We are rethinking our integration with our core services and how to share logic between the different applications that we have today.

Apart from this, the roadmaps from all of our teams have been adapted to align with our vision and now include areas of focus such as moving away from the monolith into their own service, having their own storage layer, or moving from in-house technology to industry standards. This is also reflected in all recent technical proposals that have been written, all of which start by clarifying that what’s outlined in the proposal is in alignment with our 3-year technical vision and the Golden Path.

But writing a document and sharing that proposal was just the seed of the vision. We’re now making tangible progress to get there, such as:

- We have deprecated our in-house RPC protocol PySOA in favor of gRPC (tenet: we will choose conforming over creating/reforming). We did an initial evaluation where we compared PySOA with gRPC, and built a proof-of-concept to understand which would better suit our use cases. We decided to move to gRPC because it allows us to focus on our business needs instead of maintaining our own RPC protocol. gRPC also has technical advantages: it runs over HTTP/2 (while PySOA relies on Redis), has a smaller payload size since it relies on protocol buffers and binary serialization, supports multiple programming languages instead of just Python, and has TLS/SSL support, among others. We have also started writing new services using this new protocol.

- We are enabling self-service AWS account provisioning and defining our networking and security layers so that teams can own their service’s infrastructure (tenet: teams will have end-to-end ownership of their systems and services).

- We are migrating our unmanaged MySQL database to AWS Aurora (tenet: we favor cloud managed services or serverless for commoditized systems and components).

- We have worked on several long-term designs for some of our key components such as Ordering and Event Management instead of focusing on shorter-term and incremental improvements (tenet: we will favor long-term maintainability and scale over short-term deliveries for strategic solutions). We have also started their implementation and expect our initial deliveries later this year.

- We are writing new designs that break the previous limitation/guidance to only use Python/Django and MySQL. These designs consider databases such as DynamoDB or QLDB, languages such as Kotlin or Go, and messaging and streaming services such as SNS or Kinesis, as a few examples (tenet: we will standardize on a few stacks but also empower teams to choose the right tool for the job).

- We have recently launched an Operational Readiness Review process to analyze the reliability of our current codebase as well as new designs. We are also overhauling our Security Review process, moving to full CI/CD, Dockerizing our monolith, and raising the bar in our testing and quality processes, among several other initiatives (tenet: we will strive for continuous improvement and will ask “why not?” instead of “why?”).
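To illustrate the payload-size point from the gRPC item above, the sketch below hand-encodes a single integer field using the protocol buffers varint wire format and compares it with the equivalent JSON. It is a minimal illustration rather than a benchmark: the field name `quantity` and field number 1 are made up, and a real gRPC call adds framing overhead on top of the message bytes.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint (7 bits per byte)."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # set the continuation bit: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_int_field(field_number: int, value: int) -> bytes:
    """Encode one integer field as a tag varint followed by a value varint."""
    wire_type_varint = 0
    tag = (field_number << 3) | wire_type_varint
    return encode_varint(tag) + encode_varint(value)


# Hypothetical message with a single field: quantity = 150 (field number 1).
binary_payload = encode_int_field(1, 150)
json_payload = json.dumps({"quantity": 150}).encode("utf-8")

print(binary_payload.hex())                     # 089601
print(len(binary_payload), len(json_payload))   # 3 17
```

The binary form omits field names entirely and carries only a numeric tag, which is where most of the savings come from for small messages.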

These are just a few examples that show how having a clearly outlined long-term technical direction can have a significant impact on an organization’s architecture and processes. We will detail many more examples of actual impact in upcoming posts.

We are excited about this new, long-term technical vision that will provide the right guidance to our teams, indicate how the different pieces in our system should fit together, and help our everyday decision-making process. And what’s even more exciting is that the whole company participated in its definition and has embraced it with energy and passion.